Self-Hosting your own Docker Hub

Posted on October 13th 2020

Why would you bother hosting your own image registry?

Docker Hub is extremely convenient, but the recent restrictions applied to it got me thinking that my homelab was over-reliant on the Hub for container images. If Docker Hub were ever unavailable, it would be very difficult for me to deploy some of my services. I also use a few images in my CI pipelines that do not change very often, so the 6 month limit on inactive images would cause issues for a number of my CI builds. I can completely understand why they are having to apply these restrictions - storage is expensive, especially when you are talking about 15 petabytes of images.

From a security point of view, I'd have to imagine that Docker Hub is a major target - people pull images from there and run them without ever knowing what's actually in them. Docker Hub has had at least one security issue recently involving unauthorized access to their database. This really is an attacker's dream, especially with thousands of people running docker-compose setups with services like watchtower, which automatically upgrade their running containers without any user interaction. I like a bit more control than that, especially for services running within my home network. Hosting my own image registry and building my own images gives me a better sense of control. It also gives you a bit more visibility of what actually goes into the different applications that you are running, which I think can only be a good thing. Vulnerability scanning using tools such as Clair can also be added to image build pipelines, which will give you a good view of the vulnerabilities present in the services that you run.

I am running the majority of my services on a Kubernetes cluster, so having a local image registry makes it much quicker to deploy pods and move them between nodes, as they no longer have to pull images over the internet. The pods spin up a lot faster when using images from the local registry than with images pulled from Docker Hub. A private image registry is also very useful for personal projects and for the build images used in my CI flows. For example, the image for this website is built using GitLab CI and hosted in my private image registry. In the case of my CI build images, a lot of these could exceed the 6 month inactive image limit introduced by the Docker Hub team, which would lead to build failures in my pipelines. A local image registry also means that the majority of my Kubernetes nodes do not require an outbound route to the internet, so if there are any issues with my internet connection, I will still be able to deploy services like Jellyfin and Duplicati.

These are just some of the reasons why I believe it's worth spinning up your own image registry. I know it won't be for everyone, but it works well for me.

My Setup

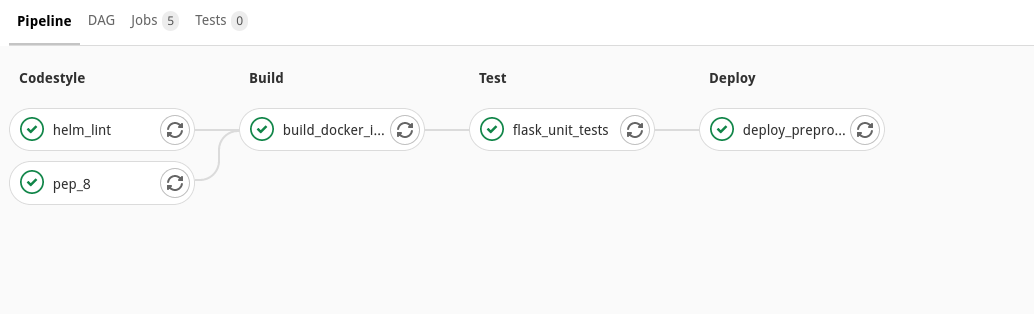

It's a pretty simple setup overall. Currently all of my git repos are on a self-hosted GitLab instance, so it made sense just to use the image registry that comes with GitLab. If you are running the omnibus GitLab installation, deploying the image registry just requires a couple of updates to the gitlab.rb config file and running gitlab-ctl reconfigure - Chef will take care of the rest. The GitLab documentation is pretty good on this so you can check that out here. Most of my git repos have automated builds using GitLab CI, with gitlab-runners deployed in my Kubernetes cluster. I use kaniko and podman to build the images, which are great because they do not require root privileges. These images can be easily pushed to the registry using the GitLab CI variables that are already available to the build containers. These CI variables are very handy and allow you to include an image build in the .gitlab-ci.yml file that is just three lines long. Below is a simple example of an image build job using kaniko:

    script:
      - mkdir -p /kaniko/.docker
      - echo "{\"auths\":{\"$CI_REGISTRY\":{\"username\":\"$CI_REGISTRY_USER\",\"password\":\"$CI_REGISTRY_PASSWORD\"}}}" > /kaniko/.docker/config.json
      - /kaniko/executor --context $CI_PROJECT_DIR --dockerfile $CI_PROJECT_DIR/Dockerfile --destination $CI_REGISTRY_IMAGE:$CI_COMMIT_TAG
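To make it a bit clearer what those script lines are doing, here is a rough shell sketch of the same two steps: writing the registry credentials file that kaniko reads, and choosing the image tag. The CI_* names are GitLab's predefined CI variables; the function names write_registry_auth and image_destination are just ones I made up for illustration. One thing worth knowing is that CI_COMMIT_TAG is only set in pipelines that run for a git tag, so this sketch assumes you would want to fall back to the short commit SHA on ordinary branch builds.

```shell
#!/bin/sh
# Sketch only: write_registry_auth and image_destination are hypothetical
# helper names. The CI_* variables are GitLab's predefined CI variables.

# Write a Docker-style config.json holding credentials for one registry.
# $1 is the directory to write into (/kaniko/.docker in the job above).
# Note: values containing quotes or backslashes would need JSON escaping,
# which this sketch does not attempt.
write_registry_auth() {
    config_dir="$1"
    mkdir -p "$config_dir"
    printf '{"auths":{"%s":{"username":"%s","password":"%s"}}}\n' \
        "$CI_REGISTRY" "$CI_REGISTRY_USER" "$CI_REGISTRY_PASSWORD" \
        > "$config_dir/config.json"
}

# Print the image reference to push to. CI_COMMIT_TAG is empty outside of
# tag pipelines, so fall back to the short commit SHA in that case.
image_destination() {
    printf '%s:%s\n' "$CI_REGISTRY_IMAGE" "${CI_COMMIT_TAG:-$CI_COMMIT_SHORT_SHA}"
}
```

In a job you would call write_registry_auth /kaniko/.docker and then pass "$(image_destination)" to the --destination flag of /kaniko/executor.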

The majority of the services that I run at home can be built by simply cloning the repo from GitHub and running a kaniko build against the Dockerfile. There are a few that might be a bit more complicated, but it's always fun trying to figure out how they build their images for Docker Hub. Once the images are built, I am able to apply the new images straight to my Kubernetes cluster automatically using the gitlab-runners and helm. Upgrades are now just one button away for the majority of my services.

If GitLab is a bit too heavy for you, a similar setup could be achieved using services such as Gitea, Jenkins and an image registry like Harbor or Docker's self-hosted registry. Gitea is quite a bit lighter than GitLab if you are stuck for resources. Harbor is an image registry backed by the CNCF and is supposed to be very good. I was going to roll out Harbor but it was far more convenient to go with the GitLab registry. One thing I would recommend is getting yourself a TLS certificate for your image registry. Trying to pull images from an insecure registry can be a bit of a pain and requires some further configuration on every client that pulls from it. That configuration would have to be applied over and over again if you are using ephemeral containers to build your projects. You really don't want to go down the insecure registry route.
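If you want a quick sanity check that the certificate is set up properly, one option is to request the registry's /v2/ endpoint, which is the base endpoint of the Docker Registry HTTP API that these registries implement. The sketch below is only a rough illustration: registry.example.com is a placeholder hostname, and describe_status and check_registry are hypothetical helper names. It relies on curl verifying the certificate by default, so a self-signed or expired certificate shows up as a failed request rather than a warning.

```shell
#!/bin/sh
# Sketch only: describe_status and check_registry are hypothetical helper
# names, and the hostname passed in is whatever your registry uses.

# Turn the HTTP status returned by GET /v2/ into a short verdict.
describe_status() {
    case "$1" in
        200) echo "ok: registry reachable, no auth required" ;;
        401) echo "ok: registry reachable, auth required" ;;
        000) echo "fail: no HTTP response (DNS, connection or TLS error)" ;;
        *)   echo "fail: unexpected status $1" ;;
    esac
}

# $1 is the registry hostname. curl prints 000 as the status code when it
# gets no HTTP response at all, which includes certificate failures.
check_registry() {
    status=$(curl -sS -o /dev/null -w '%{http_code}' "https://$1/v2/" 2>/dev/null) || true
    describe_status "${status:-000}"
}
```

Running check_registry registry.example.com from a build container should report one of the "ok" lines if the certificate is trusted there; a 401 is normal for a registry that requires a login.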

I have another post which will give you a much better picture of my homelab setup and the different services that I run - https://www.careyscloud.ie/homelab2020. It is still pretty accurate, but there have been a good few changes in the meantime as I've spent a lot of time at home in 2020!