Homelab 2020

Posted on January 11, 2020

TLDR - Four node Kubernetes cluster running a lot of the usual homelab suspects with some spices on top.

There was never really an aim to start a homelab - it just happened over time. I do highly recommend it though for anyone starting off in the tech industry. You can learn so much by just playing around in a lab without having to worry about breaking anything like you would at work. The barrier to entry is pretty low these days too thanks to the reasonably cheap Raspberry Pis. You'd be surprised at the number of different services you can run on a Pi 4, especially the 4GB model. Another great thing about having a homelab is that you can start to reduce your reliance on public cloud based services since you are basically running your own cloud services at home.

One mistake I made was not documenting any of the different stages or progressions along the way. One of the main reasons for this blog post is so that in 2030 I can look back at the progress that has been made in both the hardware and software of my little homelab. Little being the keyword here - this is not one of the massive enterprise server racks that seem to pop up on r/homelab daily. I've tried to keep the footprint small and the power consumption low. I'd love a full server rack for something like an OpenStack deployment, but my tiny apartment won't allow it and I can't really justify it to myself since my current setup handles my workload pretty comfortably.

The Hardware

HPE Gen 10 Microserver - The Always On Box


Dell OptiPlex 3060 Micro Form Factor - The "Compute" Node


Asus Vivo PC VM62 - The Runt of the Litter


A few Raspberry Pi 3b+s, 1 Raspberry Pi 4 (2GB) & 1 Raspberry Pi Model B+


NETGEAR GS308 8-Port Gigabit Ethernet Switch

Ubiquiti Unifi AP AC LITE

The Backbone

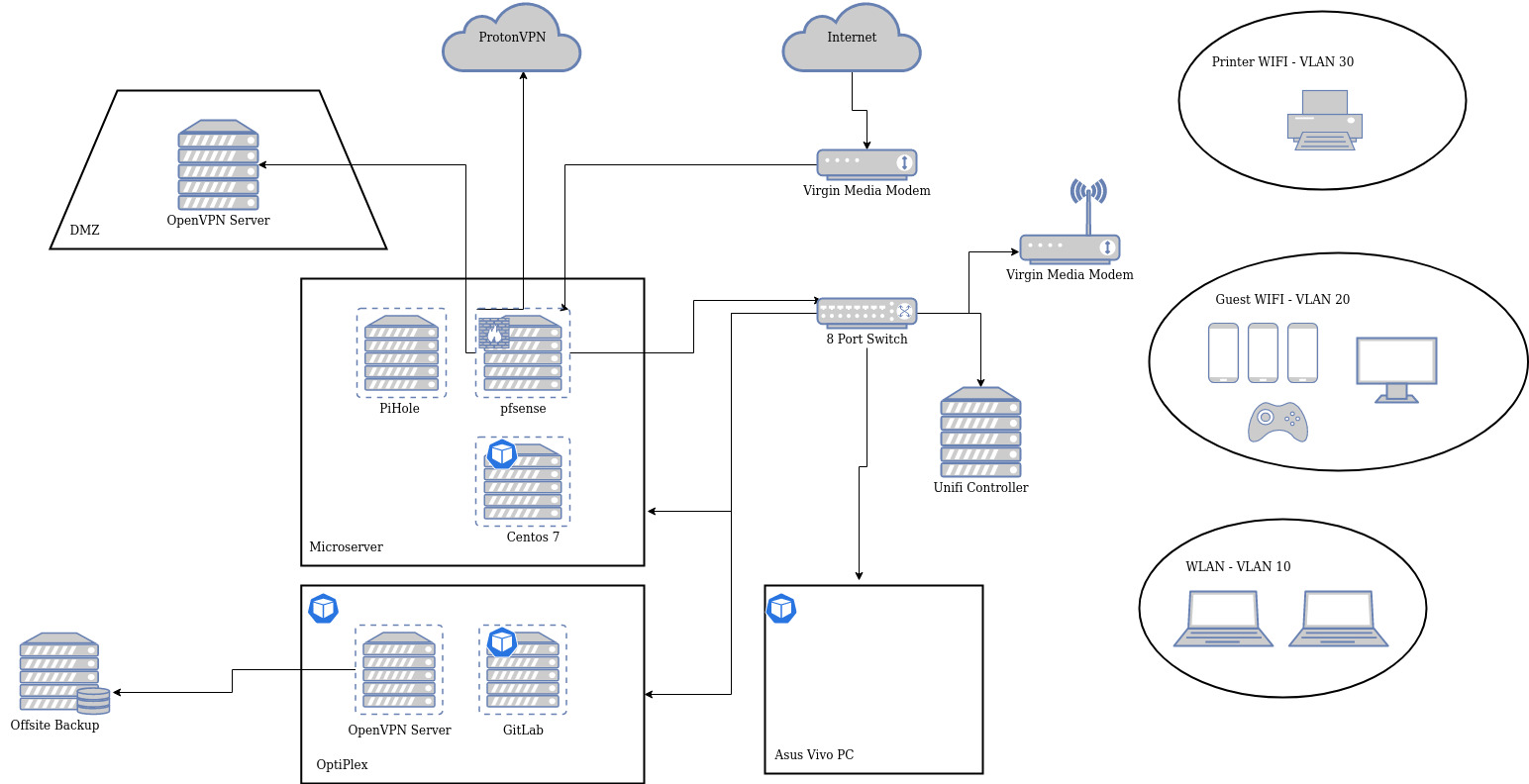

It turns out network diagrams are hard and require a bit of patience... but here is my attempt at drawing out my home network:

There are two physical networks - the LAN and the DMZ. Most of the machines reside within the LAN while there is a lonely Pi 3b+ sitting out in the DMZ. I share a handful of the lab services with a couple of friends who access them through the DMZ Pi. The three wireless networks are provided through the Unifi AP AC LITE and are all separated with their own VLANs. The Unifi AP does a great job of delivering WiFi throughout the entire apartment. Devices that shouldn't require access to the internet all go into the printer wireless network, which doesn't have a route out. Firewall, routing, DHCP etc. are all handled by pfSense apart from DNS, which is the responsibility of a PiHole VM. A secondary PiHole is running on an old Pi Model B+ which has been going for years, so it would be a shame to turn it off now.
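For anyone who finds it easier to read the layout as data, here is a rough Python sketch of the segmentation described above. Only the two physical networks, the three wireless VLANs and the printer network's missing route out come from my setup; the other network names, the VLAN IDs and the `route_out` values for everything except the printer network are assumptions made up for illustration. It is a way of writing the idea down, not pfSense configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Network:
    name: str             # label used only in this sketch
    kind: str             # "wired" or "wireless"
    vlan: Optional[int]   # hypothetical VLAN ID; None for the physical networks
    route_out: bool       # whether the firewall gives this network a route out


NETWORKS = [
    Network("lan", "wired", None, True),              # route_out assumed
    Network("dmz", "wired", None, True),              # route_out assumed
    Network("wifi-main", "wireless", 10, True),       # hypothetical name and VLAN ID
    Network("wifi-guest", "wireless", 20, True),      # hypothetical name and VLAN ID
    Network("wifi-printer", "wireless", 30, False),   # no route out, as described above
]


def can_reach_internet(network_name: str) -> bool:
    """Look up whether a device on the named network has a route out."""
    for net in NETWORKS:
        if net.name == network_name:
            return net.route_out
    raise KeyError(network_name)


if __name__ == "__main__":
    for net in NETWORKS:
        print(f"{net.name:13} vlan={net.vlan} route_out={net.route_out}")
```

Keeping the route-out decision as a single flag per network mirrors how I think about it: a device's internet access depends on which network it joins, not on per-device rules.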

What's running?

You will probably be familiar with the majority of these services, but for anyone who isn't, awesome-selfhosted on GitHub is a great place to start. There's a massive amount of information there so just search for whichever ones catch your interest.

- pfSense (VM)

- PiHole (VM & Model B+)

- Unifi-Controller (3b+)

- OpenVPN servers (VM & 3b+)

- Prometheus

- Grafana

- Jellyfin

- Jackett

- Radarr

- Sonarr

- Bazarr

- Ombi

- Nextcloud

- Transmission

- Traefik

- Nginx-Ingress

- GitLab (VM)

- Tinode

- Monica

- Searx

- GitLab-Runner

- Code-Server

- MongoDB

- MariaDB

- Matomo

- WordPress

- MetalLB

The Cluster

Most of these services run on a four node Kubernetes cluster which consists of two bare metal nodes and two virtualized nodes. I've marked the nodes in my diagram with little blue pods in the top left corner. Each of the services in the cluster is deployed and managed using Helm, which is the package manager for Kubernetes. The Helm charts used for deployment are stored in GitLab so any changes are recorded and can be easily reverted if something breaks. GitLab CI and the GitLab Registry are used to build and store the container images for the cluster. This means that everything in the cluster is controlled by code stored in GitLab. Something similar could be achieved if you rolled out Jenkins and Artifactory. Prometheus looks after the monitoring in the cluster and provides data for Grafana to use in nice pretty graphs of CPU usage, network usage, disk usage etc. The latest service that I have added is code-server, which is a remote instance of Visual Studio Code that can be accessed through any web browser - very handy if you find yourself switching between machines regularly.

Some people say that Kubernetes is overkill for a homelab and maybe they are right, but from my experience this iteration of my lab has been the easiest to manage/maintain and I've had fun doing it. Kubernetes looks after the services. If a service goes down, Kubernetes kills the pod and starts up a new one, on a different node if need be. There is no configuration of reverse proxies/edge routers as that's all handled by the ingress controllers. Persistent storage can all be managed through Kubernetes too with something like the nfs-client-provisioner. It is also very easy for me to add/remove nodes. If I want to start with a fresh node all I have to do is install Docker, kubeadm & kubelet and run the kubeadm join to add it to the cluster. It provides me with an amazing amount of flexibility and reliability. I think it's worth it. Give it a try before knocking it - the documentation is very good!
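To give a feel for the self-healing behaviour described above, here is a toy reconcile loop in Python. It only sketches the general idea of comparing the desired state with the actual state and fixing the difference; it is not how Kubernetes is implemented, and the `reconcile` function, the node names and the choice of services are all made up for illustration.

```python
def reconcile(desired, placements, healthy_nodes):
    """Return a service -> node mapping with every desired service on a healthy node.

    desired:        list of service names that should be running
    placements:     dict mapping service name -> node it currently runs on
    healthy_nodes:  list of nodes currently able to run workloads
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy nodes left to schedule on")

    # Keep every placement that is still wanted and still on a healthy node.
    result = {
        svc: node
        for svc, node in placements.items()
        if svc in desired and node in healthy_nodes
    }

    # Place anything missing on the healthy node running the fewest services.
    for svc in desired:
        if svc not in result:
            target = min(
                healthy_nodes,
                key=lambda n: sum(1 for v in result.values() if v == n),
            )
            result[svc] = target
    return result


# Hypothetical four node cluster: two bare metal nodes and two virtual ones.
nodes = ["metal-1", "metal-2", "vm-1", "vm-2"]
services = ["jellyfin", "nextcloud", "searx", "grafana"]

# Initial placement with nothing running yet.
state = reconcile(services, {}, nodes)

# Simulate losing a node: its services get placed on the survivors,
# while services on healthy nodes are left where they are.
survivors = [n for n in nodes if n != "metal-1"]
state_after = reconcile(services, state, survivors)

if __name__ == "__main__":
    print(state)
    print(state_after)
```

The part that matters is that I only describe what should be running; working out where it runs, and moving it when a node disappears, is left to the loop.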

I have to give honourable mentions to Jellyfin and Searx. Jellyfin is a great open source media server with a fantastic development team and community behind it. Searx is a privacy focused metasearch engine that helps to stop you feeding your data to the advertising companies.

The Rest

There are a couple of other bits and pieces scattered around the place on Raspberry Pis. I went for the Pi for the Unifi-Controller as it's a well-trodden path, and if the WiFi goes down at home I get shouted at. There is also an offsite backup server which consists of the robust combination of an RPi 3B+ and a large USB 3 external hard drive. It's not the fastest thing in the world but it gets the job done.

This all sounds good but then of course it looks like this:

This was just a quick overview of the state of my lab in 2020. If you have questions or any suggestions of self-hosted services that I could be running, please give me a shout over on Mastodon.

Short Term Future

- Upgrade Helm from v2 to v3

- Upgrade Traefik from v1.7 to v2

Medium Term Future

- Move pfSense to a dedicated machine

- Add something like an Intel NUC (or maybe a Ryzen machine) to give me a bit of capacity for spinning up DEV VMs

- Play around with Firecracker

Long Term Future - Who knows?

- All SSD storage with 10Gb networking if the price is right?

- Add an ARM based server